Jason Wu / Allion Labs

With the rapid development of voice assistants in recent years, sales of smart speakers have been growing explosively every year, not only in Europe and America but also in Asia. To deliver a consistent user experience, a smart speaker is typically validated for voice assistant performance before it is launched. Even so, users still run into incorrect recognition. What causes these issues? In this article, we explore them from several angles.

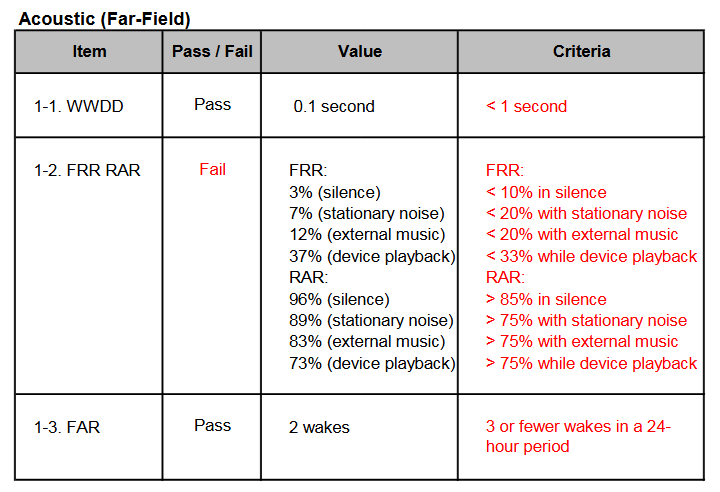

First, let’s take a look at smart speakers with Amazon Alexa support. These speakers need to pass a verification program. Allion Labs can assist vendors in validating their products and preparing the self-test results needed to apply for the Amazon Alexa Built-in logo. Here are the four performance check points for Amazon Alexa:

- FRR (Wake-Word False Rejection Rate)

This verifies whether the product wakes up properly each time a pre-recorded test utterance is played.

- RAR (Response Accuracy Rate)

This verifies whether the product gives the correct response each time a pre-recorded test utterance is played.

- WWDD (Wake Word Detection Delay)

To achieve a real-time response and spare users a long wait, this verification checks the time between the wake-word and the commands.

- FAR (Wake-Word False Alarm Rate)

A smart speaker that is plugged in usually remains on standby full-time. In everyday conversation, however, users may say the wake-word without meaning to, or say words that sound similar to it. This verification checks that the product is not activated accidentally during a long conversation.

Figure 1: Amazon Alexa Certification Test Report Provided by Allion
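The four check points above can be summarized from a test log in a few lines. The sketch below is only an illustration: the `WakeTrial` record, the function names, and the choice to report false alarms per hour are assumptions made for this example, not Amazon's official definitions or Allion's tooling.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WakeTrial:
    """One played test utterance (hypothetical log format, assumed for this sketch)."""
    woke: bool                     # the device woke up on the wake-word
    response_correct: bool         # the device gave the expected response
    wake_delay_s: Optional[float]  # delay measured by the test rig, None if no wake


def false_rejection_rate(trials: List[WakeTrial]) -> float:
    """FRR: share of wake-word utterances that failed to wake the device."""
    return sum(not t.woke for t in trials) / len(trials)


def response_accuracy_rate(trials: List[WakeTrial]) -> float:
    """RAR: share of utterances that received the expected response."""
    return sum(t.response_correct for t in trials) / len(trials)


def mean_wake_delay(trials: List[WakeTrial]) -> Optional[float]:
    """WWDD summary: average measured delay over the trials that woke the device."""
    delays = [t.wake_delay_s for t in trials if t.wake_delay_s is not None]
    return sum(delays) / len(delays) if delays else None


def false_alarms_per_hour(false_wake_count: int, audio_hours: float) -> float:
    """FAR summary: unintended wake-ups per hour of played conversation audio."""
    return false_wake_count / audio_hours


# Made-up example data, for illustration only.
trials = [
    WakeTrial(woke=True, response_correct=True, wake_delay_s=0.5),
    WakeTrial(woke=True, response_correct=False, wake_delay_s=0.75),
    WakeTrial(woke=False, response_correct=False, wake_delay_s=None),
    WakeTrial(woke=True, response_correct=True, wake_delay_s=0.25),
]

print(false_rejection_rate(trials))    # 0.25
print(response_accuracy_rate(trials))  # 0.5
print(mean_wake_delay(trials))         # 0.5
print(false_alarms_per_hour(3, 24.0))  # 0.125
```

Pass or fail thresholds are left out on purpose, since the article does not state them.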

Potential risks

In general, a product that passes the performance verification above can be said to have good speech recognition quality and to be ready for the market. But reality is often less ideal! Users bring the smart speaker home and, after a simple set-up process, start to enjoy a virtual assistant that helps with everyday tasks and answers their questions. They ask several questions or give some commands, only to find that the smart speaker does not do its job. What’s going on here? In our experience, smart speakers usually carry several potential performance risks:

- Can’t be activated

- Incorrect response

- Wakes up without any request

- Slow response

- Unstable network connection

- Poor sound quality

Solutions

Standard performance verification uses a fixed number of test utterances, played at different distances and angles and with several kinds of interfering noise, to determine whether the product passes. We all know that time is money: the shorter the development and verification time, the sooner the product reaches the market and the sooner companies start to earn profits. As a result, verification is usually limited to a relatively short period of time and a relatively narrow set of conditions. That limited scope is often what determines whether vendors later receive customer complaints or even product returns.

To help vendors find such problems before the product is launched, Allion Labs proposes additional validation check points for verifying smart speaker performance:

- More types and quantities of utterances

- More voice accents and tones

- More distance shifts

- More environmental noise

- Different decoration materials (glass, curtains, shutters, books, TV …)

- Simulation of different locations in the home environment (wall, window, TV cabinet, coffee table, desk, bedside table …)

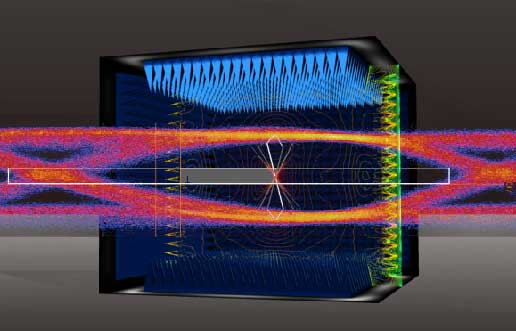

Figure 2: Test environment for speech recognition

Case Study and Test Results

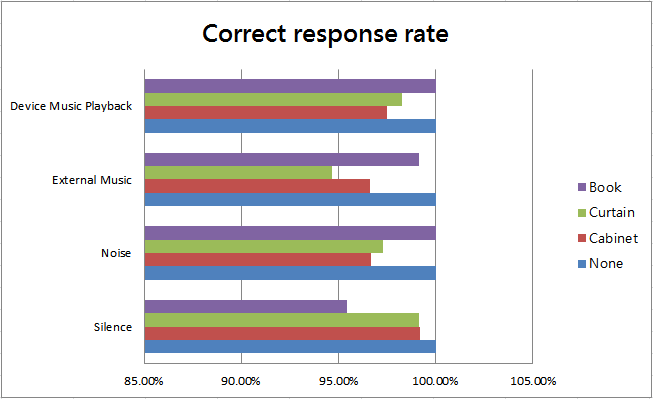

Let’s take a look at the data from an experiment. We used the Amazon Echo as the test target and tested it under different obstruction conditions:

Obstruction: unobstructed, blocked by a cabinet, blocked by curtains, blocked by a book

Speaking Distance: 1m, 3m

Test Speech: English, 3 male and 3 female voices, 480 sentences in total

Environmental/Background Noise: 4 Types

Figure 3: Test results for simulating home environments
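To make the size of such an experiment concrete, the conditions listed above can be enumerated as a test matrix. This is a minimal sketch with two loudly stated assumptions: that every obstruction, distance, and noise combination is run (the article does not say so), and that the four noise types can be represented by placeholder names, since the article does not identify them. How the 480 sentences are distributed across conditions is also not stated, so it is left out.

```python
from itertools import product

# Conditions taken from the experiment description above.
OBSTRUCTIONS = ["unobstructed", "cabinet", "curtains", "book"]
DISTANCES_M = [1, 3]
# Placeholder labels: the article only says "4 types" of background noise.
NOISE_TYPES = ["noise_1", "noise_2", "noise_3", "noise_4"]


def build_test_matrix(obstructions, distances_m, noise_types):
    """Return one dict per test condition, assuming a full crossing of all factors."""
    return [
        {"obstruction": o, "distance_m": d, "noise": n}
        for o, d, n in product(obstructions, distances_m, noise_types)
    ]


matrix = build_test_matrix(OBSTRUCTIONS, DISTANCES_M, NOISE_TYPES)
print(len(matrix))  # 32 conditions: 4 obstructions x 2 distances x 4 noise types
print(matrix[0])
```

Even this small grid yields 32 conditions, which suggests why a time-limited standard verification tends to cover only a narrow slice of real home placements.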

In general performance verification, the device is usually tested in an ideal placement so that the results can be quantified. But once users bring it home, the home decoration, the furniture layout, and the location of the smart speaker itself greatly affect the product’s performance. These conditions are usually not covered by the earlier performance verification, so they are likely to lead to consumer complaints.

Based on these results, in addition to helping vendors obtain voice assistant certification, Allion has designed a variety of simulations of different home layouts and scenes to verify the effect of smart speaker placement, helping vendors understand what kinds of issues their products may face and how to solve them.